Evaluation will be done by comparing the automatically generated results to the results generated by manual annotation of experts.

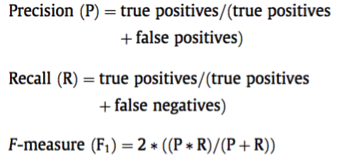

The primary evaluation metric for all three sub-tracks will consist of micro-averaged precision, recall and F1-scores:

The used evaluation scripts together with a Readme file with instructions will be available on GitHub to enable systematic fine-tuning and improvement of results on the provided training/development data using by participating teams.

For the CANTEMIST-CODING sub-track we also apply a standard ranking metric: Mean Average Precision (MAP) for evaluation purposes.

MAP (Mean Average Precision) is an established metric used for ranking problems.

All metrics will be computed including and excluding mentions with 8000/6 code.