LivingNER results will be posted here after the SEPLN 2022 conference.

Submission

Evaluation process: (1) you submit your results, (2) we perform the evaluation off-line and (3) return the final scores.

Submission format

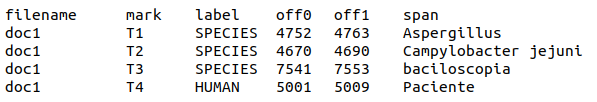

LivingNER – Species NER track. A TSV file with a header row, one row per mention, and the following columns:

- filename

- mark

- label (mention label)

- off0 (starting character offset)

- off1 (ending character offset)

- span (mention span)
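As an illustration only, here is a minimal Python sketch that writes predictions in the Species NER format with the standard csv module. The document name, the T1 mark and the SPECIES label are made-up example values, and the offset check assumes that off1 is an exclusive end offset (so off1 - off0 equals the span length); please check both against the guidelines and the sample files.

```python
import csv
import io

# Column order of the Species NER track, as listed above.
NER_COLUMNS = ["filename", "mark", "label", "off0", "off1", "span"]


def write_ner_tsv(rows, handle):
    """Write NER predictions as tab-separated values with a header row.

    Each row is a dict keyed by NER_COLUMNS. Assumption: off1 is an
    exclusive end offset, so off1 - off0 must equal len(span).
    """
    writer = csv.writer(handle, delimiter="\t", lineterminator="\n")
    writer.writerow(NER_COLUMNS)
    for row in rows:
        if row["off1"] - row["off0"] != len(row["span"]):
            raise ValueError(f"Offsets do not match the span length: {row}")
        writer.writerow([row[column] for column in NER_COLUMNS])


# Hypothetical prediction for a hypothetical document.
predictions = [
    {"filename": "caso_clinico_ejemplo", "mark": "T1", "label": "SPECIES",
     "off0": 10, "off1": 20, "span": "Klebsiella"},
]

buffer = io.StringIO()
write_ner_tsv(predictions, buffer)
print(buffer.getvalue(), end="")
```

When writing to disk, open the file with `open(path, "w", newline="", encoding="utf-8")`, as recommended for the csv module.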

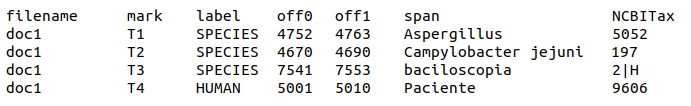

LivingNER – Species Norm track. A TSV file with a header row, one row per mention, and the following columns:

- filename

- mark

- label (mention label)

- off0 (starting character offset)

- off1 (ending character offset)

- span (mention span)

- NCBITax (mention code in the NCBI Taxonomy)

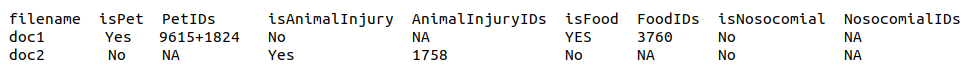

LivingNER – Clinical IMPACT track. A TSV file with a header row, one row per document, and the following columns:

- filename

- isPet (Yes/No)

- PetIDs (NCBI Taxonomy codes of the pet and farm animals mentioned in the document)

- isAnimalInjury (Yes/No)

- AnimalInjuryIDs (NCBI Taxonomy codes of the animals causing injuries mentioned in the document)

- isFood (Yes/No)

- FoodIDs (NCBI Taxonomy codes of the food mentions in the document)

- isNosocomial (Yes/No)

- NosocomialIDs (NCBI Taxonomy codes of the nosocomial species mentioned in the document)
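A document-level row can be assembled from the codes collected for each category. The sketch below is one possible way to do it, assuming the conventions of the FAQ example on this page: codes in a cell are joined with +, NOCODE is kept like any other code, and a category with no evidence gets No and NA. The function name and its input dictionary are hypothetical.

```python
# Category names in the column order of the Clinical IMPACT track.
CATEGORIES = ["Pet", "AnimalInjury", "Food", "Nosocomial"]

# Header: filename, isPet, PetIDs, isAnimalInjury, AnimalInjuryIDs, ...
IMPACT_HEADER = ["filename"] + [
    column for category in CATEGORIES for column in (f"is{category}", f"{category}IDs")
]


def impact_row(filename, codes_by_category):
    """Build one Clinical IMPACT row (a list of cells) for a document.

    codes_by_category maps a category name to the NCBI Taxonomy codes found
    for it, possibly including "NOCODE". Assumptions: codes are joined with
    "+", repeated codes are dropped (first occurrence kept), and a category
    without codes is reported as "No" and "NA".
    """
    row = [filename]
    for category in CATEGORIES:
        codes = list(dict.fromkeys(codes_by_category.get(category, [])))
        row += ["Yes", "+".join(codes)] if codes else ["No", "NA"]
    return row


# Reproduces example (1) from the FAQ.
print("\t".join(IMPACT_HEADER))
print("\t".join(impact_row("caso_clinico_neurologia78",
                           {"Pet": ["NOCODE"], "Food": ["9031", "NOCODE"]})))
```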

To evaluate your systems AFTER June 2022, use CodaLab:

The following sections are kept here for historical reasons. They are not relevant AFTER June 2022.

Submission method

Submissions will be made via SFTP.

Submission instructions

Up to 5 submissions per sub-track are allowed.

You must submit ONE SINGLE ZIP file with the following structure:

- One subdirectory per subtask in which you are participating.

- In each subdirectory, you must include the results in a TSV file with the format defined in the “Submission format” section.

- The TSV file must have the .tsv file extension and include ALL your predictions.

- If you have more than one system, you can include the predictions of each one and we will evaluate them (up to 5 prediction runs). Use a separate file for each run, named with a run number and a recognizable name, for example 1-systemDL.tsv and 2-systemlookup.tsv.

- In addition, in the parent directory (the top level of the ZIP), you must add a README.txt file with your contact details (team name, affiliation and authors) and a very short description of your system.
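The structure above can be produced with Python's standard zipfile module. This is a sketch, not an official tool: the subdirectory name ner (borrowed from the -s option of the evaluation library), the run file name and the README text are hypothetical placeholders.

```python
import tempfile
import zipfile
from pathlib import Path

MAX_RUNS = 5  # per sub-track, as stated above


def build_submission(zip_path, runs_by_subtask, readme_text):
    """Pack prediction runs into a single submission ZIP.

    runs_by_subtask maps a subdirectory name (one per subtask) to the local
    .tsv files of its runs. README.txt is written at the top level.
    """
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("README.txt", readme_text)
        for subtask, runs in runs_by_subtask.items():
            if len(runs) > MAX_RUNS:
                raise ValueError(f"{subtask}: at most {MAX_RUNS} runs are allowed")
            for run in map(Path, runs):
                if run.suffix != ".tsv":
                    raise ValueError(f"{run} must have the .tsv extension")
                zf.write(run, arcname=f"{subtask}/{run.name}")


# Usage with a throwaway run file; "ner" and the file names are hypothetical.
with tempfile.TemporaryDirectory() as tmp:
    run_file = Path(tmp) / "1-systemDL.tsv"
    run_file.write_text("filename\tmark\tlabel\toff0\toff1\tspan\n", encoding="utf-8")
    zip_file = Path(tmp) / "submission.zip"
    build_submission(zip_file, {"ner": [run_file]}, "Team: (hypothetical)\n")
    with zipfile.ZipFile(zip_file) as zf:
        print(zf.namelist())
```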

Evaluation Library

The LivingNER evaluation library is available on GitHub.

Please make sure you have the latest version.


These scripts are distributed as part of the LivingNER shared task. They are written in Python 3 and are meant to be run from the command line:

$ python main.py -g ../gs-data/sample_entities_subtask1.tsv -p ../toy-data/sample_entities_subtask1_MISSING_ONE_FILE.tsv -s ner

$ python main.py -g ../gs-data/sample_entities_subtask2.tsv -p ../toy-data/sample_entities_subtask2_MISSING_ONE_FILE.tsv -s norm

$ python main.py -g ../gs-data/sample_entities_subtask3.tsv -p ../toy-data/pred_sample_entities_subtask3.tsv -s app

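The scripts compute precision, recall and F-score for the sub-track selected with the -s option (ner, norm or app), i.e., LivingNER Species NER, LivingNER Species NORM or LivingNER Clinical IMPACT. For Species NER and Species NORM, scores are reported per document and as micro-averages; for Clinical IMPACT, they are reported per category, both for the Yes/No assignment alone and taking the NCBI codes into account.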
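For intuition about the metrics, the sketch below scores sets of annotation tuples. It assumes a prediction is correct only when it exactly matches a gold tuple (here: filename, label and offsets), which may differ from the library's actual matching rules. Micro-averaging pools all documents before dividing, while the per-document scores divide within each document; a document without predictions gets precision nan (0/0), which is presumably what happens to 32032497_ES in the NER example below.

```python
import math


def safe_div(numerator, denominator):
    # 0/0 is undefined, so return nan (the per-document tables print "nan").
    # Note: this also yields nan for F when precision and recall are both 0;
    # other tools use 0 for that corner case.
    return numerator / denominator if denominator else math.nan


def prf(gold, pred):
    """Precision, recall and F-score of predicted vs. gold annotation sets."""
    # Assumption: an exact tuple match counts as a true positive.
    true_positives = len(gold & pred)
    precision = safe_div(true_positives, len(pred))
    recall = safe_div(true_positives, len(gold))
    f_score = safe_div(2 * precision * recall, precision + recall)
    return precision, recall, f_score


def per_document(gold, pred):
    """Score each document separately; annotation tuples start with the filename."""
    documents = sorted({a[0] for a in gold} | {a[0] for a in pred})
    return {
        doc: prf({a for a in gold if a[0] == doc}, {a for a in pred if a[0] == doc})
        for doc in documents
    }


def micro_average(gold, pred):
    """Micro-average: pool all annotations from all documents, then score once."""
    return prf(set(gold), set(pred))


# Hypothetical (filename, label, off0, off1) tuples; doc2 has no predictions.
gold = {("doc1", "SPECIES", 0, 10), ("doc1", "SPECIES", 15, 22), ("doc2", "SPECIES", 3, 8)}
pred = {("doc1", "SPECIES", 0, 10), ("doc1", "SPECIES", 15, 22)}

print(per_document(gold, pred))  # doc2 gets precision nan and recall 0.0
print(micro_average(gold, pred))
```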

Example


- LivingNER-Species NER

$ cd src

$ python main.py -g ../gs-data/sample_entities_subtask1.tsv -p ../toy-data/sample_entities_subtask1_MISSING_ONE_FILE.tsv -s ner

According to file headers, you are on subtask ner

According to file headers, you are on subtask ner

-----------------------------------------------------

Clinical case name Precision

-----------------------------------------------------

32032497_ES nan

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Clinical case name Recall

-----------------------------------------------------

32032497_ES 0.0

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Clinical case name F-score

-----------------------------------------------------

32032497_ES nan

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Micro-average metrics

-----------------------------------------------------

Micro-average precision = 1.0

Micro-average recall = 0.9568

Micro-average F-score = 0.9779

../toy-data/sample_entities_subtask1_MISSING_ONE_FILE.tsv|1.0|0.9568|0.9779
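The last output line condenses the run into a pipe-separated record: the predictions file followed by micro-average precision, recall and F-score. Assuming that four-field layout (the NORM example below uses it too), a small parser can help compare runs of the same sub-track; RunScore, parse_summary and rank_runs are hypothetical names.

```python
from typing import NamedTuple


class RunScore(NamedTuple):
    predictions: str
    precision: float
    recall: float
    f_score: float


def parse_summary(line):
    """Parse a 'predictions|precision|recall|fscore' summary line.

    rsplit keeps any '|' inside the path in the first field.
    """
    path, precision, recall, f_score = line.strip().rsplit("|", 3)
    return RunScore(path, float(precision), float(recall), float(f_score))


def rank_runs(lines):
    """Sort runs of the same sub-track by micro-average F-score, best first."""
    return sorted((parse_summary(line) for line in lines),
                  key=lambda score: score.f_score, reverse=True)


print(parse_summary("../toy-data/sample_entities_subtask1_MISSING_ONE_FILE.tsv|1.0|0.9568|0.9779"))
```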

- LivingNER-Species Norm

$ cd src

$ python main.py -g ../gs-data/sample_entities_subtask2.tsv -p ../toy-data/sample_entities_subtask2_predictions.tsv -s norm

According to file headers, you are on subtask norm, GS file

According to file headers, you are on subtask norm, predictions file

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/ann_parsing.py:46: UserWarning: There are duplicated entries in ../toy-data/sample_entities_subtask2_predictions.tsv. Keeping just the first one...

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/ann_parsing.py:59: UserWarning: Lines 1 in ../toy-data/sample_entities_subtask2_predictions.tsv contain unvalid codes. Valid codes are those that appear in ../ncbi_codes_unique.tsv. Ignoring lines with valid codes...

-----------------------------------------------------

Clinical case name Precision

-----------------------------------------------------

32032497_ES 0.5

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Clinical case name Recall

-----------------------------------------------------

32032497_ES 0.3333

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Clinical case name F-score

-----------------------------------------------------

32032497_ES 0.4

-----------------------------------------------------

caso_clinico_medtropical54 1.0

-----------------------------------------------------

casos_clinicos_infecciosas1 1.0

-----------------------------------------------------

casos_clinicos_infecciosas141 1.0

-----------------------------------------------------

cc_onco908 1.0

-----------------------------------------------------

-----------------------------------------------------

Micro-average metrics

-----------------------------------------------------

Micro-average precision = 0.9854

Micro-average recall = 0.9712

Micro-average F-score = 0.9783

../toy-data/sample_entities_subtask2_predictions.tsv|0.9854|0.9712|0.9783
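The two warnings in this example flag duplicated rows and codes missing from ncbi_codes_unique.tsv. Both can be checked before submitting. The sketch below assumes that duplicates are identical rows, that the code file lists one code per line in its first tab-separated column, and that each NCBITax value is compared as a whole; the library's own rules may differ, for instance for combined codes.

```python
def load_valid_codes(lines):
    """Collect valid codes, assuming one code per line in the first TSV column."""
    return {line.split("\t")[0].strip() for line in lines if line.strip()}


def clean_predictions(rows, valid_codes):
    """Drop exact duplicate rows (keeping the first) and rows with unknown codes.

    Each row is a tuple whose last element is the NCBITax value, which is
    checked as a whole against valid_codes. Returns (kept, rejected).
    """
    seen, kept, rejected = set(), [], []
    for row in rows:
        if row in seen:
            continue  # duplicated entry: keep just the first one
        seen.add(row)
        (kept if row[-1] in valid_codes else rejected).append(row)
    return kept, rejected


# Hypothetical excerpt of the valid-code list and hypothetical predictions.
valid = load_valid_codes(["573\n", "10407\n", "11103\n"])
rows = [
    ("doc1", "T1", "SPECIES", 0, 10, "Klebsiella", "573"),
    ("doc1", "T1", "SPECIES", 0, 10, "Klebsiella", "573"),
    ("doc1", "T2", "SPECIES", 20, 25, "virus", "999999999"),
]
kept, rejected = clean_predictions(rows, valid)
print(len(kept), "kept;", len(rejected), "rejected:", rejected)
```

With a real code list, something like `with open("ncbi_codes_unique.tsv", encoding="utf-8") as f: valid = load_valid_codes(f)` would work, provided the file layout matches the assumption above.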

- LivingNER-Clinical IMPACT

$ cd src

$ python main.py -g ../gs-data/sample_subtask3.tsv -p ../toy-data/sample_subtask3_predictions.tsv -s app

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/ann_parsing.py:99: UserWarning: Lines 5 in ../toy-data/sample_subtask3_predictions.tsv contain unvalid codes. Valid codes are those that appear in ../ncbi_codes_unique.tsv. Ignoring lines with valid codes...

Basic metrics (not taking into account NCBI codes, just Y/N assignment)

-----------------------------------------------------

Pet

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

AnimalInjury

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

Food

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

Nosocomial

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/livingner_app.py:90: UserWarning: Precision score automatically set to zero because there are no predicted positives

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/livingner_app.py:104: UserWarning: Global F1 score automatically set to zero for simple metrics to avoid division by zero

/home/antonio/Documents/Work/BSC/Projects/micro/scripts/livingner-evaluation-library/src/livingner_app.py:110: UserWarning: Global F1 score automatically set to zero for complex metrics to avoid division by zero

Precision = 0

Recall = 0.0

F1score = 0

-----------------------------------------------------

Complex metrics (taking into account NCBI codes)

-----------------------------------------------------

Pet

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

AnimalInjury

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

Food

Precision = 1.0

Recall = 1.0

F1score = 1.0

-----------------------------------------------------

Nosocomial

Precision = 0

Recall = 0.0

F1score = 0
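The basic metrics only compare the Yes/No flags of each category across documents. The sketch below mirrors the zero-division convention reported by the warnings above (precision 0 when there are no predicted positives, F1 0 when precision and recall are both 0). It ignores NCBI codes, so it does not cover the complex metrics, and flag_metrics is a hypothetical name.

```python
def flag_metrics(gold_flags, pred_flags):
    """Precision, recall and F1 of the Yes/No flag of one category.

    gold_flags and pred_flags map filename -> "Yes"/"No"; a document missing
    from pred_flags counts as "No".
    """
    gold_pos = {doc for doc, flag in gold_flags.items() if flag == "Yes"}
    pred_pos = {doc for doc, flag in pred_flags.items() if flag == "Yes"}
    true_positives = len(gold_pos & pred_pos)
    # Return 0 instead of dividing by zero, as the warnings above describe.
    precision = true_positives / len(pred_pos) if pred_pos else 0
    recall = true_positives / len(gold_pos) if gold_pos else 0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0
    return precision, recall, f1


# Hypothetical flags: the system never predicts "Yes", as for Nosocomial above.
gold = {"doc1": "Yes", "doc2": "No"}
pred = {"doc1": "No", "doc2": "No"}
print(flag_metrics(gold, pred))  # (0, 0.0, 0)
```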

-----------------------------------------------------

Publications

Participant papers are available at CEUR: http://ceur-ws.org/Vol-3202/

Instructions for the working notes papers:

- System description papers should be formatted according to the uniform 1-column CEURART style. LaTeX and Word templates are available at: http://ceur-ws.org/HOWTOSUBMIT.html#PREPARE

- Regular papers must be at least 5 pages long. There is no maximum page limit.

- Papers must be written in English.

- Each paper must include the following copyright footnote on its first page: {\let\thefootnote\relax\footnotetext{Copyright \textcopyright\ 2022 for this paper by its authors. Use permitted under Creative Commons License Attribution 4.0 International (CC BY 4.0). IberLEF 2022, September 2022, A Coruña, Spain.}}

- Remove page numbers, if any, and make sure that there are no headers or footnotes other than the mandatory copyright footnote on the first page.

- Authors should be listed with their full name and full affiliation (university and country). Names must be complete (no initials), e.g. “Soto Pérez” instead of “S. Pérez”.

- Paper titles should be written in English title case (major words capitalized), e.g., “Filling an Author Agreement by Autocompletion” rather than “Filling an author agreement by autocompletion”.

- At least one author of each paper must sign the CEUR copyright agreement. Instructions and templates can be found at http://ceur-ws.org/HOWTOSUBMIT.html. The signed form must be sent to the task organizers along with the paper. Important: the form must be physically signed by hand on paper.

Papers that lack the copyright footnote, include page numbers, or lack a properly signed CEUR copyright agreement will not be considered.

Submit your paper via EasyChair. See here for the submission procedure.

Have a look at the 2021 Meddoprof proceedings (here) and the 2020 Cantemist proceedings (here).

Relevant publications

- Gerner, Martin, Goran Nenadic, and Casey M. Bergman. “LINNAEUS: a species name identification system for biomedical literature.” BMC Bioinformatics 11.1 (2010): 1-17.

- Federhen, Scott. “The NCBI taxonomy database.” Nucleic Acids Research 40.D1 (2012): D136-D143.

- Pafilis, Evangelos, Sune P. Frankild, Lucia Fanini, Sarah Faulwetter, Christina Pavloudi, Aikaterini Vasileiadou, Christos Arvanitidis, and Lars Juhl Jensen. “The species and organisms resources for fast and accurate identification of taxonomic names in text.” PLOS ONE 8.6 (2013): 1-6.

- Schoch, Conrad L., et al. “NCBI Taxonomy: a comprehensive update on curation, resources and tools.” Database: The Journal of Biological Databases and Curation 2020 (2020): baaa062. doi:10.1093/database/baaa062

- Miranda-Escalada, Antonio, Eulàlia Farré-Maduell, and Martin Krallinger. “Named Entity Recognition, Concept Normalization and Clinical Coding: Overview of the Cantemist Track for Cancer Text Mining in Spanish, Corpus, Guidelines, Methods and Results.” Proceedings of the Iberian Languages Evaluation Forum (IberLEF 2020), CEUR Workshop Proceedings (2020): 303-323.

- Lima-López, Salvador, Eulàlia Farré-Maduell, Antonio Miranda-Escalada, Vicent Brivá-Iglesias, and Martin Krallinger. “NLP applied to occupational health: MEDDOPROF shared task at IberLEF 2021 on automatic recognition, classification and normalization of professions and occupations from medical texts.” Procesamiento del Lenguaje Natural 67 (2021): 243-256.

- Jimeno Yepes, Antonio, Ameer Albahem, and Karin Verspoor. “Using Discourse Structure to Differentiate Focus Entities from Background Entities in Scientific Literature.” Proceedings of the 19th Annual Workshop of the Australasian Language Technology Association (2021): 174-178.

- Pyysalo, Sampo, et al. “Overview of the infectious diseases (ID) task of BioNLP shared task 2011.” Proceedings of BioNLP Shared Task 2011 Workshop (2011).

Workshop

LivingNER will be part of the IberLEF (Iberian Languages Evaluation Forum) 2022 evaluation campaign at SEPLN 2022 (38th Annual Congress), which takes place in September in A Coruña (Spain).

IberLEF encourages the research community to define new challenges and obtain cutting-edge Natural Language Processing results involving at least one of the Iberian languages: Spanish, Portuguese, Catalan, Basque or Galician. To this end, several shared tasks are proposed.

LivingNER participants will have the opportunity to publish their system descriptions at the IberLEF proceedings.

In addition, selected LivingNER participants will present their systems at the IberLEF 2022 workshop (September 2022).

Have a look at the 2020 Cantemist presentations (here) and the 2021 Meddoprof presentations (here).

FAQ

Email your questions to Antonio Miranda (antoniomiresc@gmail.com).

- Q: What is the goal of the shared task?

The goal is to predict the annotations (or codes) of the documents in the test and background sets. The goal of sub-task 3 (Clinical IMPACT) is to predict the document categories and the evidence supporting them.

- Q: How do I register?

Here: https://temu.bsc.es/livingner/2022/01/28/registration/

- Q: How do I submit the results?

We will provide further information in the following days.

Download the example ZIP file.

See the Submission page for more info.

- Q: Can I use additional training data to improve model performance?

Yes, participants may use any additional training data they have available, as long as they describe it in the working notes. We will ask you to summarize such resources in your participant paper.

- Q: The task consists of three sub-tasks. Do I need to complete all of them? In other words, is it allowed to complete only one or two sub-tasks?

Sub-tasks are independent, and participants may take part in one, two or all three of them.

- Q: How can I submit my results? Can I submit several prediction files for each sub-task?

You will have to create a ZIP file with your prediction files and submit it to EasyChair (further details will be released soon).

Yes, you can submit up to 5 prediction files, all in the same ZIP.

Download the example ZIP file.

See the Submission page for more info.

- Q: Should prediction files have headings?

No, prediction files should have no headings.

- Q: Are all codes and mentions equally weighted?

Yes.

- Q: LivingNER-NORM and Clinical IMPACT. What version of the NCBI Taxonomy is in use?

We are using the latest version available in January 2021.

There is a complete list of the valid codes on Zenodo. Codes not present in this list will not be used for the evaluation.

- Q: LivingNER-NORM and Clinical IMPACT. What is meant by the /H appended to various codes?

Some SPECIES mentions are more specific than the most granular term available in the NCBI Taxonomy. In such cases, we append /H to the code.

For example, “K pneumoniae BLEE” is not in the NCBI Taxonomy, but “Klebsiella pneumoniae” is (code 573). Therefore, we assign 573|H.

- Q: LivingNER-NORM and Clinical IMPACT. What do codes separated by a “|” mean?

Some SPECIES mentions can only be correctly described by a combination of NCBI Taxonomy codes. For instance, “virus B y C de la hepatitis” (hepatitis B and C viruses) does not exist as such in the NCBI Taxonomy. However, it can be expressed as a combination of the NCBI Taxonomy terms “Hepatitis B virus” (10407) and “Hepacivirus C” (11103), so we assign 10407|11103.

- Q: LivingNER-NORM and Clinical IMPACT. If a predicted mention has several codes, do I need to provide them in a particular order?

No. The evaluation library accepts combined codes in any order.

- Q: LivingNER-App. When one of the entities is assigned NOCODE (the placeholder used when the NCBI Taxonomy does not contain any suitable code), should this NOCODE marker appear in the final output for sub-track 3, or should we omit it entirely?

As a made-up example (note how NOCODE is handled):

(1) caso_clinico_neurologia78 Yes NOCODE No NA Yes 9031+NOCODE No NA <– adding NOCODE as if it were any other code

or

(2) caso_clinico_neurologia78 Yes NA No NA Yes 9031 No NA <– ignoring NOCODE entirely and writing NA when no other evidence is available, even though the flag is “Yes”

The correct answer is the first one. As a general rule, whenever you need to add an NCBI code but the mention is outside the scope of the terminology, you should add NOCODE. That is what we did during the manual annotation process (https://doi.org/10.5281/zenodo.6385162).
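Since combined codes may be given in any order, one simple way to compare two NCBITax values is to treat them as sets of components. This sketch splits on | as in 10407|11103 above; it does not interpret markers such as H, and the function names are hypothetical.

```python
def code_components(value, sep="|"):
    """Split a combined NCBITax value into an order-independent set."""
    return frozenset(part.strip() for part in value.split(sep))


def same_code(a, b):
    """True if two NCBITax values have the same components in any order."""
    return code_components(a) == code_components(b)


print(same_code("10407|11103", "11103|10407"))  # True
print(same_code("10407|11103", "10407"))        # False
```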

Schedule

UPDATED ON MAY 3

| Status | Event | Date (all deadlines are 23:59 CEST) | Link |
|---|---|---|---|
| ✓ | Sample Set release | March 11 | Sample set |
| ✓ | Train Set release and guidelines publication | March 18 | Training set & Guidelines |
| ✓ | Evaluation Library release | March 25 | Evaluation Library |
| ✓ | Development Set release | March 31 | Validation set |
| ✓ | Test and Background Set release | April 22 | Test+Background set |
| ✓ | Multilingual LivingNER corpus | May 3 | Multilingual LivingNER corpus |
| ✓ | End of evaluation period. Predictions submission deadline | June 12 UPDATED | Submission instructions |
| ✓ | Evaluation delivery | June 17 UPDATED | TBD |
| ✓ | Working Notes deadline | June 24 UPDATED | EasyChair |
|  | Working Notes Corrections deadline | July 4 UPDATED | TBD |
|  | Camera-ready submission deadline | July 11 UPDATED | EasyChair |
|  | Workshop IberLEF @ SEPLN 2022 | September 20 | IberLEF |

Registration

To register, please fill in the registration form:

Awards

TBD