Evaluation will be performed by comparing the automatically generated results against manual annotations produced by experts.

Evaluation metrics

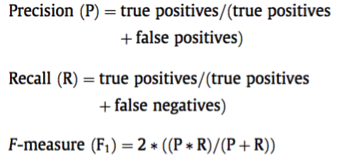

The primary evaluation metrics for sub-track 1 (DisTEMIST – entities) and sub-track 2 (DisTEMIST – linking) are micro-averaged precision, recall and F1-score.
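For reference, these are the standard definitions, assuming that true positives (TP), false positives (FP) and false negatives (FN) are pooled over all documents before the ratios are computed, which is what micro-averaging means. The exact matching criteria are set by the official evaluation script:

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot P \cdot R}{P + R}
```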

The evaluation scripts, together with a README file containing instructions, are available on GitHub so that participating teams can systematically fine-tune and improve their results on the provided training data.
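The official GitHub scripts are the reference implementation. The following is only a minimal sketch of micro-averaged scoring and rests on several assumptions. Each annotation is represented as a hypothetical hashable tuple, such as `(doc_id, start, end, label)` for entities or `(doc_id, start, end, code)` for linking. A prediction counts as correct only on an exact match. The function name `micro_prf` and the sample data are illustrative and not taken from the official scripts.

```python
from collections.abc import Hashable, Iterable


def micro_prf(gold: Iterable[Hashable], pred: Iterable[Hashable]) -> tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 over annotations pooled across all documents.

    Assumption (hypothetical): each annotation is a hashable tuple such as
    (doc_id, start, end, label) or (doc_id, start, end, code), and only exact
    matches count. Duplicate annotations are collapsed by the set conversion.
    """
    gold_set = set(gold)
    pred_set = set(pred)
    tp = len(gold_set & pred_set)  # predictions that exactly match a gold annotation
    fp = len(pred_set - gold_set)  # predictions with no matching gold annotation
    fn = len(gold_set - pred_set)  # gold annotations the system did not predict
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical sample data: two documents, one missed entity and one spurious prediction.
gold = [("doc1", 0, 8, "ENFERMEDAD"), ("doc1", 20, 31, "ENFERMEDAD"), ("doc2", 5, 12, "ENFERMEDAD")]
pred = [("doc1", 0, 8, "ENFERMEDAD"), ("doc2", 5, 12, "ENFERMEDAD"), ("doc2", 40, 47, "ENFERMEDAD")]

if __name__ == "__main__":
    print([round(x, 3) for x in micro_prf(gold, pred)])  # [0.667, 0.667, 0.667]
```

Because the counts are pooled before dividing, documents with many annotations weigh more in the final score than they would under macro-averaging, where per-document scores are averaged.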