Evaluation Setting

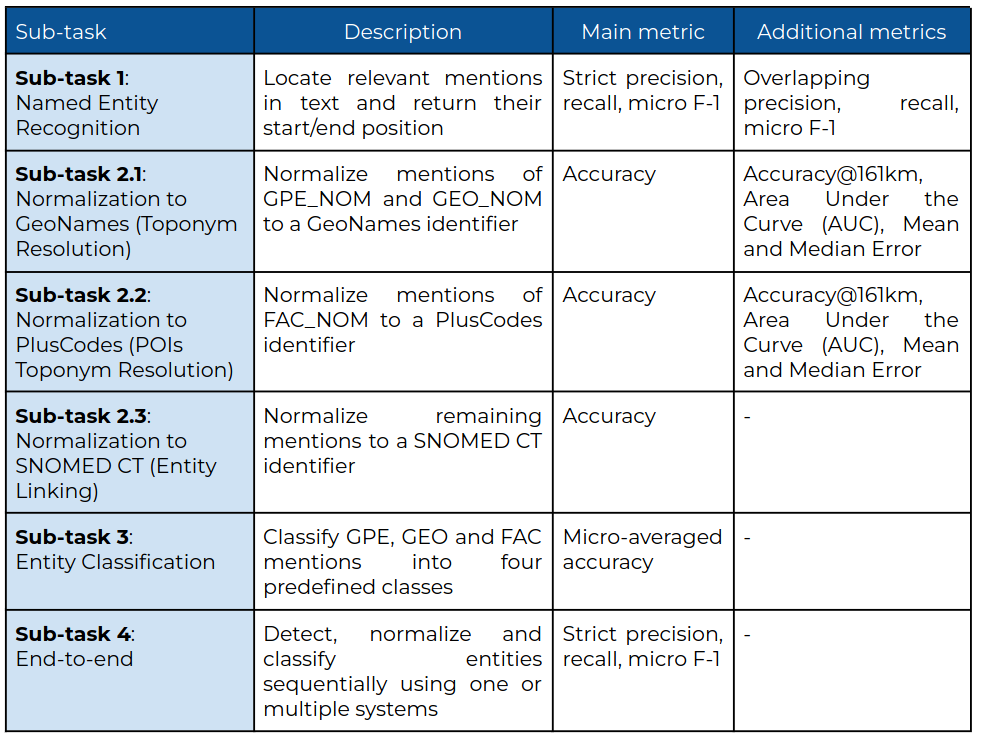

Systems are evaluated by comparing their automatically generated results against the manual annotations produced by experts. The task uses a mix of traditional performance-based metrics (such as accuracy and F-score) and distance-based metrics (Accuracy at 161 km, roughly 100 miles, and AUC). The summary of the different tasks and their evaluation metrics shown in this section is taken from the MEDDOPLACE Task Guide.
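To make the distance-based metrics more concrete, here is a minimal Python sketch of how Accuracy at 161 km and AUC are commonly computed in the geocoding literature. It is not the official MEDDOPLACE scorer. The function names, the haversine distance, the Earth radius and the AUC normalizer (about 20,039 km, roughly half the Earth's circumference) are all assumptions made for illustration; the official scoring script may define these details differently.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius (assumption for this sketch)
MAX_ERROR_KM = 20039.0     # assumed maximum possible error, ~half the circumference


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def accuracy_at_km(errors_km, threshold_km=161.0):
    """Fraction of predictions whose error distance is within the threshold."""
    if not errors_km:
        return 0.0
    return sum(e <= threshold_km for e in errors_km) / len(errors_km)


def auc_of_errors(errors_km):
    """Normalized area under the curve of sorted log error distances.

    Lower is better: 0.0 means every prediction is exact. Uses the
    trapezoidal rule with unit spacing between sorted predictions.
    """
    if len(errors_km) < 2:
        raise ValueError("need at least two error distances")
    logs = sorted(math.log(e + 1.0) for e in errors_km)
    area = sum((logs[i] + logs[i + 1]) / 2 for i in range(len(logs) - 1))
    return area / (math.log(MAX_ERROR_KM) * (len(logs) - 1))


if __name__ == "__main__":
    # Hypothetical gold and predicted coordinates (lat, lon), not real task data.
    gold = [(40.4168, -3.7038), (41.3874, 2.1686)]
    pred = [(40.4168, -3.7038), (40.4168, -3.7038)]
    errors = [haversine_km(*g, *p) for g, p in zip(gold, pred)]
    print(accuracy_at_km(errors), auc_of_errors(errors))
```

Unlike accuracy and F-score, both functions work on the error distance between each predicted and gold coordinate pair. Accuracy at 161 km rewards predictions that land close enough, while AUC takes the whole error distribution into account.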

The evaluation will be run on CodaLab, and the official scoring script is also available for local evaluation. See the Evaluation Library for more information.