Metrics and Examples

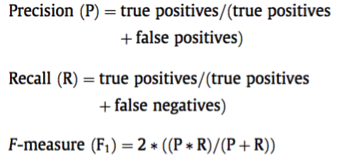

SympTEMIST submissions will be evaluated by comparing the automatically generated results against the manual annotations produced by experts. As in the DisTEMIST and MedProcNER shared tasks, the primary evaluation metrics for both sub-tracks will be micro-averaged precision, recall and F1-score.
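For reference, the standard definitions of these micro-averaged scores can be written as follows. Here $TP$, $FP$ and $FN$ are the counts of true positives, false positives and false negatives pooled over all documents of the evaluated set; the symbols are conventional notation and are not taken from the task description itself.

$$
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot P \cdot R}{P + R}
$$

Because the counts are pooled before the ratios are computed, documents with many annotations weigh more heavily in the final score than documents with few.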

An evaluation library will be made available on GitHub so that participating teams can systematically fine-tune and improve their results on the provided training data.
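Until the official library is consulted, a team can sanity-check its own scores with a small script. The following is a minimal sketch, not the official evaluation code: it assumes exact matching and assumes that each annotation is represented as a tuple `(doc_id, start, end, label)`. The function name `micro_prf`, the tuple layout and the `SYMPTOM` label are illustrative choices, not part of the task specification.

```python
from typing import Hashable, Iterable, NamedTuple


class Scores(NamedTuple):
    precision: float
    recall: float
    f1: float


def micro_prf(gold: Iterable[Hashable], pred: Iterable[Hashable]) -> Scores:
    """Micro-averaged precision, recall and F1 under exact matching.

    Assumption: every annotation carries its document id, so pooling all
    annotations into one set sums TP/FP/FN across documents.
    """
    gold_set, pred_set = set(gold), set(pred)
    tp = len(gold_set & pred_set)   # predicted and present in the gold standard
    fp = len(pred_set - gold_set)   # predicted but not in the gold standard
    fn = len(gold_set - pred_set)   # in the gold standard but not predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return Scores(precision, recall, f1)


# Made-up annotations: (doc_id, start offset, end offset, label)
gold = [("doc1", 0, 5, "SYMPTOM"), ("doc1", 10, 18, "SYMPTOM"), ("doc2", 3, 9, "SYMPTOM")]
pred = [("doc1", 0, 5, "SYMPTOM"), ("doc2", 3, 9, "SYMPTOM"), ("doc2", 20, 25, "SYMPTOM")]

scores = micro_prf(gold, pred)
print(f"P={scores.precision:.3f} R={scores.recall:.3f} F1={scores.f1:.3f}")
```

In this toy example two of the three predictions match the gold standard and one gold annotation is missed, so precision, recall and F1 all come out at 2/3. For a sub-track that also scores assigned codes, the same function could be reused by adding the code as a further tuple element, again as an assumption about how matches are defined.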