Evaluation

System outputs will be evaluated by comparing them against the expert-annotated gold standard.

Evaluation metrics

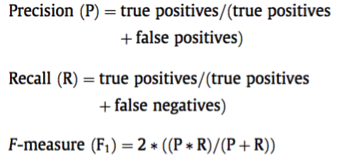

The primary evaluation metric for the ToxNER (Subtask 1) and ToxUse (Subtask 2) will consist of micro-averaged precision, recall and F1-scores:
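As a rough illustration of micro-averaging, the sketch below pools true positives, false positives, and false negatives over all documents and labels before computing the three scores. It is not the official scorer. The function name `micro_prf`, the tuple layout `(doc_id, start, end, label)`, and the exact-match criterion are assumptions made for this example, so the official script may match annotations differently.

```python
from typing import Hashable, Iterable, Tuple


def micro_prf(
    gold: Iterable[Hashable], pred: Iterable[Hashable]
) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 over pooled annotations.

    Assumption: an annotation counts as correct only if it is identical
    to a gold annotation (exact match); duplicates are ignored.
    """
    gold_set, pred_set = set(gold), set(pred)
    tp = len(gold_set & pred_set)   # predicted and in gold
    fp = len(pred_set - gold_set)   # predicted but not in gold
    fn = len(gold_set - pred_set)   # in gold but not predicted

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return precision, recall, f1


# Hypothetical annotations: (doc_id, start_offset, end_offset, label).
gold = [
    ("doc1", 0, 5, "LABEL_A"),
    ("doc1", 10, 18, "LABEL_B"),
    ("doc2", 3, 9, "LABEL_A"),
    ("doc2", 20, 27, "LABEL_B"),
]
pred = [
    ("doc1", 0, 5, "LABEL_A"),    # exact match
    ("doc2", 3, 9, "LABEL_A"),    # exact match
    ("doc2", 21, 27, "LABEL_B"),  # offset differs, counted as an error
]

p, r, f = micro_prf(gold, pred)
print(round(p, 3), round(r, 3), round(f, 3))  # prints: 0.667 0.5 0.571
```

Because the counts are pooled before dividing, frequent labels and long documents weigh more heavily in the final score than they would under macro-averaging.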

Evaluation library

The evaluation scripts, along with a README file containing detailed instructions, are available on GitHub so that participating teams can score and tune their systems on the provided training data.

You can run the script using:

$> python main.py -g ..\ground_truth\gs.tsv -p ..\dummy_submission\test_submission.tsv -s ner
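The command above uses Windows-style path separators. As a minimal sketch, the same call can be assembled from Python with `pathlib`, so the paths resolve on any operating system. The helper names `build_eval_command` and `run_evaluation` are hypothetical; only the `main.py` script, the `-g`, `-p`, and `-s` flags, and the `ner` value come from the command shown above.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_eval_command(gold: Path, pred: Path, subtask: str = "ner") -> List[str]:
    """Assemble the argument list for the evaluation script.

    Only the flags shown in the task description (-g, -p, -s) are used.
    """
    return [
        sys.executable, "main.py",
        "-g", str(gold),
        "-p", str(pred),
        "-s", subtask,
    ]


def run_evaluation(gold: Path, pred: Path, subtask: str = "ner") -> None:
    """Run the script; assumes the working directory contains main.py."""
    subprocess.run(build_eval_command(gold, pred, subtask), check=True)


# Same relative locations as in the command above, built portably.
gold_path = Path("..") / "ground_truth" / "gs.tsv"
pred_path = Path("..") / "dummy_submission" / "test_submission.tsv"
cmd = build_eval_command(gold_path, pred_path)
print(cmd[1:])
```

Calling `run_evaluation(gold_path, pred_path)` from the directory that contains `main.py` would then launch the evaluation and raise an error if the script exits with a non-zero status.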