- READ-BioMED: https://github.com/READ-BioMed/socialdisner-2022

- PLN-CMM: https://github.com/plncmm/socialdisner

- NLP-CIC-WFU: https://github.com/ajtamayoh/NLP-CIC-WFU-Contribution-to-SocialDisNER-shared-task-2022

- RACAI: https://github.com/racai-ai/RNER

- SINAI: https://huggingface.co/chizhikchi/Spanish_disease_finder

- ITAINNOVA: https://github.com/ITAINNOVA/SocialDisNER

Description of the Corpus

Training and validation (annotated), test and background (unannotated) datasets

The SMM4H-Spanish corpus is a collection of 10,000 health-related tweets in Spanish annotated with disease mentions by a medical expert. The annotation followed carefully designed guidelines that have proven useful for annotating both medical literature (clinical case reports) and EHRs. The aim of the corpus is to extract a wide variety of disease mentions from social media so that health-related issues of practical importance can be characterized further.

The corpus data was obtained from a Twitter crawl focusing on selected accounts covering patient associations and organizations, healthcare institutions and professionals, as well as their followers, with the aim of enriching this social media content for healthcare-relevant tweets. The crawl was then filtered to keep only tweets written in Spanish, with particular (but not exclusive) emphasis on profiles located in Spain and some other Spanish-speaking countries.

The corpus was primarily annotated by medical experts in an iterative process that included the adaptation of medical document annotation guidelines specifically for this task. These guidelines will be publicly released together with the SocialDisNER corpus.

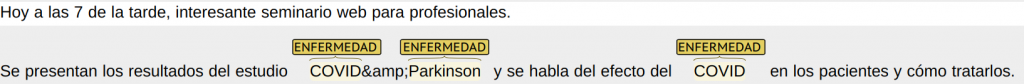

The annotation process was performed using the web-based tool brat. Below is an example of what the annotated tweets look like:

All in all, 10,000 tweets were annotated. They were split into 50% training (5,000), 25% development (2,500) and 25% test (2,500). The different splits will be released according to the track schedule and made accessible on Zenodo.

FORMAT

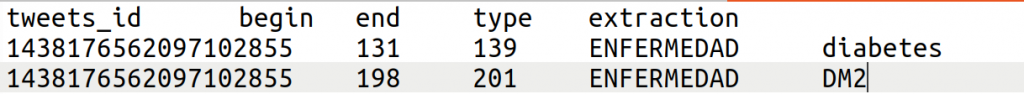

SocialDisNER: Tweet disease mention detection. Annotations are stored in a tab-separated file with 5 columns:

tweet_id begin end type extraction
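As a minimal sketch of how such a file might be read, the Python snippet below parses the five columns into typed records. It assumes that the first line of the file is a header naming the columns, that `begin` and `end` are character offsets into the tweet text with an exclusive end (as in brat standoff annotations), and it uses a made-up tweet, tweet ID and `ENFERMEDAD` label purely for illustration. The helper name `read_mentions` is hypothetical.

```python
import csv
import io
from typing import NamedTuple


class Mention(NamedTuple):
    tweet_id: str
    begin: int
    end: int
    type: str
    extraction: str


def read_mentions(handle):
    """Parse a tab-separated annotation file whose first line names the 5 columns."""
    # QUOTE_NONE keeps quotation marks inside tweet text as literal characters.
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    return [
        Mention(row["tweet_id"], int(row["begin"]), int(row["end"]),
                row["type"], row["extraction"])
        for row in reader
    ]


# Hypothetical tweet and annotation row, made up for illustration.
tweet_text = "Tengo diabetes desde 2010"
sample_tsv = (
    "tweet_id\tbegin\tend\ttype\textraction\n"
    "1001\t6\t14\tENFERMEDAD\tdiabetes\n"
)

mentions = read_mentions(io.StringIO(sample_tsv))
for m in mentions:
    # Assumption: the end offset is exclusive, so slicing recovers the mention.
    assert tweet_text[m.begin:m.end] == m.extraction
```

For a file on disk, the same function can be called on a handle opened with `open(path, encoding="utf-8", newline="")`.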

Datasets

Train set

The train set contains 5,000 annotated tweets. It will be published on Zenodo.

Validation set

The validation set contains 2,500 annotated tweets. It will be published on Zenodo.

Test and background sets

The test set contains 2,500 tweets. The background set contains 50K tweets. Both will be published on Zenodo.

The test and background sets will be published together. You will have to submit predictions for the whole set, but you will only be evaluated on the test set predictions.
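The effect of this setup can be sketched as follows: predictions for background tweets are simply ignored before scoring. This is only an illustration, not the official evaluation script. The function name `keep_test_predictions`, the tuple layout (mirroring the five annotation columns) and the tweet IDs are all hypothetical.

```python
def keep_test_predictions(predictions, test_ids):
    """Keep only the prediction rows whose tweet_id belongs to the test set."""
    test_ids = set(test_ids)
    return [row for row in predictions if row[0] in test_ids]


# Hypothetical predictions over the combined test + background release.
predictions = [
    ("1001", 6, 14, "ENFERMEDAD", "diabetes"),  # tweet in the test set
    ("9999", 0, 5, "ENFERMEDAD", "gripe"),      # tweet in the background set
]
test_ids = {"1001"}

scored = keep_test_predictions(predictions, test_ids)
```

Only the rows left in `scored` would contribute to the evaluation under this assumption.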

Test set with Gold Standard annotations

The Gold Standard annotations of the test set will be released after the submission deadline.

Corpora Stats.

| | Training | Development |
|---|---|---|
| # Tweets | 5000 | 2500 |
| # characters | 1253431 | 516768 |
| # tokens | 211555 | 84478 |
| Avg. char. /tweet | 250.69 | 206.71 |
| Avg. Tok. /tweet | 42.31 | 33.79 |
| # disease mentions | 15173 | 4252 |
| # unique disease mentions | 4407 | 1413 |
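The two average rows follow directly from the totals in the same table (total divided by number of tweets). A short Python check of that arithmetic, using only the figures reported above, might look like this:

```python
# Totals copied from the Corpora Stats table.
stats = {
    "Training": {"tweets": 5000, "characters": 1253431, "tokens": 211555},
    "Development": {"tweets": 2500, "characters": 516768, "tokens": 84478},
}

averages = {}
for split, s in stats.items():
    averages[split] = {
        "chars_per_tweet": round(s["characters"] / s["tweets"], 2),
        "tokens_per_tweet": round(s["tokens"] / s["tweets"], 2),
    }
    print(split, averages[split])
```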

Additional Resources

Evaluation Script

- Official evaluation script: TBD

Linguistic Resources

- CUTEXT. See it on GitHub.

Medical term extraction tool.

It can be used to extract relevant medical terms from tweets.

- SPACCC POS Tagger. See it on GitHub.

Part-of-speech tagger for Spanish medical domain corpora.

It can be used as a component of your system.

- NegEx-MES. See it on Zenodo and on GitHub.

A system for negation detection in Spanish clinical texts based on the NegEx algorithm.

It can be used as a component of your system.

- AbreMES-X. See it on Zenodo.

Software used to generate the Spanish Medical Abbreviation DataBase.

- AbreMES-DB. See it on Zenodo.

Spanish Medical Abbreviation DataBase.

It can be used to fine-tune your system.

- MeSpEn Glossaries. See it on Zenodo.

Repository of bilingual medical glossaries made by professional translators.

It can be used to fine-tune your system.

- Occupations gazetteer. See it on Zenodo.

A gazetteer of occupations extracted from a set of terminologies (DeCS, ESCO, SnomedCT and WordNet) and Stanford CoreNLP.

Word embeddings

- FastText Spanish medical embeddings. See them on Zenodo.

Word and subword embeddings trained for the Spanish medical domain.

They can be used as a component of your system.

- FastText Spanish Twitter embeddings. See them on Zenodo.

Word and subword embeddings trained with Spanish Twitter data related to COVID-19.

They can be used as a component of your system.

Baseline

More resources TBD

Annotation Guidelines

The SocialDisNER corpus of the SMM4H 2022 track was manually annotated by medical experts following the SMM4H-SocialDisNER guidelines. These guidelines were adapted from previous versions used to annotate EHRs and medical literature (clinical case reports) and contain rules for annotating mentions of diseases in health-related tweets in Spanish. They also include some considerations regarding the mapping of the annotations to SNOMED-CT concept codes.

Guidelines were created de novo in three phases:

- First, an initial version of the guidelines was adapted from clinical annotation guidelines after annotating an initial batch of ~500 tweets and outlining the main problems and difficulties of the social media data.

- Second, a stable version of the guidelines was reached by iteratively annotating sample sets of the SocialDisNER corpus until quality control was satisfactory.

- Third, the guidelines are iteratively refined as manual annotation continues.

The annotation guidelines are available at Zenodo.