Important Update: The deadline for result submission has been extended to 4 June at 22:00 CET.

If you had issues uploading your submission, please contact the organizers (Miguel Rodríguez, Eduard Rodríguez, and Martin Krallinger) immediately. It seems the submission platform experienced some issues during the final submission hour.

Evaluation

Submissions will be evaluated against a set of full-text clinical case reports paired with their human-generated summaries, which have the same characteristics as the provided training documents. Two main metrics will be used: Rouge-L-Sum and BERTScore. Additional metrics that are not part of the official evaluation might also be used.
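To give an intuition for what an LCS-based metric such as Rouge-L rewards, here is a minimal sketch of a simplified ROUGE-L F1 score. It is not the organizers' scorer: the tokenization (lowercased whitespace splitting) and the function names are assumptions made for illustration, and Rouge-L-Sum implementations usually also split texts into sentences before computing a summary-level score. BERTScore needs a pretrained model and is not covered here.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """Simplified ROUGE-L F1 on lowercased whitespace tokens (illustrative only)."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of candidate tokens on the LCS
    recall = lcs / len(ref)      # share of reference tokens on the LCS
    return 2 * precision * recall / (precision + recall)
```

For official numbers, rely on the evaluation run by the organizers rather than on this approximation.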

Submission Instructions

Important information for MultiClinSum participants regarding the submission instructions. Please read carefully.

1. MultiClinSum Sub-tracks

Each sub-track of the MultiClinSum task is independent: submissions can be made separately for any of the four sub-tracks (languages). It is NOT mandatory to submit predictions for all languages, so teams may generate predictions for a single language only. Importantly, for a given language it is mandatory to generate predictions for all cases in the test set, not just a subset.
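Since every case in a language's test set needs a prediction, a quick coverage check before uploading can help. The sketch below is one possible way to do it, assuming the `_sum.txt` naming convention described in section 2; the function name `missing_summaries` is hypothetical and not part of any official tooling.

```python
def missing_summaries(report_names, summary_names):
    """Return the report file names that have no matching summary file name."""
    produced = set(summary_names)
    missing = []
    for name in sorted(report_names):
        # Strip the ".txt" extension before appending the summary suffix.
        stem = name[:-len(".txt")] if name.endswith(".txt") else name
        if f"{stem}_sum.txt" not in produced:
            missing.append(name)
    return missing
```

The two arguments can be built from directory listings, for example with `os.listdir` on the test set folder and on one run folder. An empty result means every report has a summary.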

Specifically, the four sub-tracks are:

- MultiClinSum-en: Clinical case summarization for content in English.

- MultiClinSum-es: Clinical case summarization for content in Spanish.

- MultiClinSum-fr: Clinical case summarization for content in French.

- MultiClinSum-pt: Clinical case summarization for content in Portuguese.

When submitting your predictions or runs, make sure you have correctly specified the corresponding target language. To do so, follow the predefined naming convention for your submissions.

2. MultiClinSum file submission naming convention

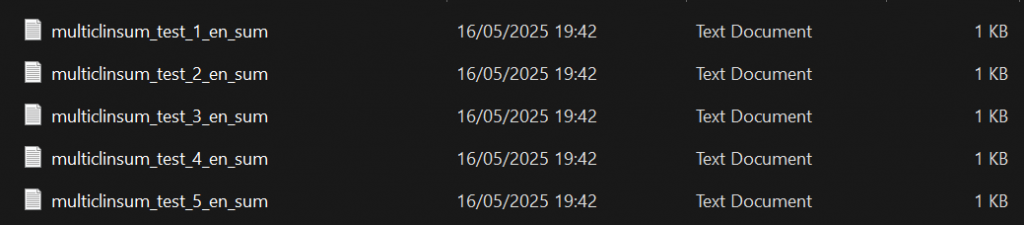

In order to make sure that the correspondence between generated summaries and full case reports is clear and unambiguous, the files corresponding to the summary of a given full-text clinical case report in the test set need to be identified with the extension “_sum.txt”, following the naming convention already provided in the training examples.

For instance, for a given English case report, denoted in the test set as multiclinsum_test_1_en.txt, the corresponding summary file name should be multiclinsum_test_1_en_sum.txt.

So, for a case report with index i and language lang:

multiclinsum_test_{i}_{lang}.txt

The generated txt file should be:

multiclinsum_test_{i}_{lang}_sum.txt
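The mapping from report file name to summary file name can be sketched as follows. This is only an illustration: the helper name `summary_filename` is hypothetical, and the pattern assumes that the index is an integer and that the language code is one of en, es, fr or pt, as suggested by the sub-track names.

```python
import re

# Assumed shape of a test report name: multiclinsum_test_{i}_{lang}.txt
REPORT_PATTERN = re.compile(r"^multiclinsum_test_(\d+)_(en|es|fr|pt)\.txt$")


def summary_filename(report_filename):
    """Derive the expected summary file name for a test case report."""
    match = REPORT_PATTERN.match(report_filename)
    if match is None:
        raise ValueError(f"Unexpected report file name: {report_filename!r}")
    index, lang = match.groups()
    return f"multiclinsum_test_{index}_{lang}_sum.txt"
```

For example, `summary_filename("multiclinsum_test_1_en.txt")` returns `"multiclinsum_test_1_en_sum.txt"`, matching the example above.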

3. Number of allowed runs per sub-track

For each sub-track (i.e. language) a total of 5 versions or runs are allowed. For instance, for the MultiClinSum-en sub-track, up to 5 different sets of predictions for the entire test set can be uploaded to the submission page. They will be evaluated independently and only the best one will be selected for the leaderboard.

You can also send fewer runs: a single run, 2 runs, 3 runs or 4 runs. Sending 5 runs is not required; we allow up to 5 in case the participating team would like to try out different approaches, methods or settings.

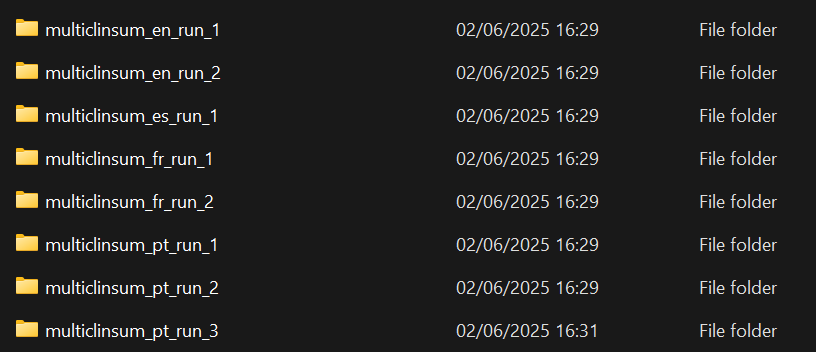

In order to send the predictions of a given sub-track and run, place the generated summary files into a single directory (following the naming conventions for generated summaries specified above). For us to identify each sub-track/run combination correctly, add the language and the run number to the directory name.

An example submission of 5 runs for the English MultiClinSum-en sub-track should contain the following folders, each of them holding the generated summaries for that run:

- multiclinsum_en_run_1 (corresponding to run 1)

- multiclinsum_en_run_2 (corresponding to run 2)

- multiclinsum_en_run_3 (corresponding to run 3)

- multiclinsum_en_run_4 (corresponding to run 4)

- multiclinsum_en_run_5 (corresponding to run 5)

So, for any submission with run number r and language lang, the directory name will be:

multiclinsum_{lang}_run_{r}
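A small helper can build the run directory name and catch invalid values early. This is a sketch under the constraints stated above (four languages, at most 5 runs per sub-track); the names `LANGUAGES`, `MAX_RUNS` and `run_directory_name` are hypothetical.

```python
LANGUAGES = ("en", "es", "fr", "pt")  # the four sub-track languages
MAX_RUNS = 5                          # runs allowed per sub-track


def run_directory_name(lang, run):
    """Build the directory name for one sub-track/run combination."""
    if lang not in LANGUAGES:
        raise ValueError(f"Unknown language code: {lang!r}")
    if not 1 <= run <= MAX_RUNS:
        raise ValueError(f"Run number must be between 1 and {MAX_RUNS}: {run}")
    return f"multiclinsum_{lang}_run_{run}"
```

For example, `run_directory_name("en", 1)` returns `"multiclinsum_en_run_1"`.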

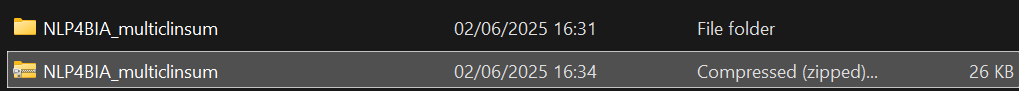

Hence, you can submit up to 20 directories (assuming you use 5 runs for each of the 4 sub-tracks). Place your final collection of submissions into one folder that identifies your team. Namely, the parent folder for a group with team name {team_name} should be:

{team_name}_multiclinsum

This parent directory should in turn be compressed into a zip file, and will be the submission you will deliver. Please note that, since the BioASQ submission system only allows one single .zip file to be submitted, all the selected sub-tracks with their respective runs must be compressed into the same file.
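One way to produce the single zip file is Python's standard `shutil.make_archive`. The sketch below assumes the archive is named after the parent folder (for example `{team_name}_multiclinsum.zip`), which is an assumption rather than a stated requirement; the helper name `zip_submission` is hypothetical.

```python
import shutil
from pathlib import Path


def zip_submission(team_dir):
    """Compress the team's parent folder into <folder name>.zip next to it."""
    team_dir = Path(team_dir).resolve()
    if not team_dir.name.endswith("_multiclinsum"):
        raise ValueError(f"Parent folder should end with '_multiclinsum': {team_dir.name}")
    archive = shutil.make_archive(
        base_name=str(team_dir),         # ".zip" is appended automatically
        format="zip",
        root_dir=str(team_dir.parent),
        base_dir=team_dir.name,          # keeps the parent folder inside the archive
    )
    return Path(archive)
```

Passing `base_dir` keeps the `{team_name}_multiclinsum` folder as the top-level entry of the archive, so all run directories stay grouped under it when the file is unpacked.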

For the sake of clarity, this is what your submission would look like if you submitted 1 run for the Spanish sub-track, 2 runs for the English and French sub-tracks and 3 runs for the Portuguese sub-track:

- Files (for the first run of the English submission):

- Folders:

- Zip file:

4. Test set summary prediction file format

Each test set prediction is simply a plain text file containing the generated summary, as was the case for the training set examples. You are responsible for making sure that the file can be read correctly using UTF-8 decoding.
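A minimal way to verify this is to try decoding the raw bytes of each summary file. The helper name `is_valid_utf8` below is hypothetical; the check itself only relies on Python's built-in `bytes.decode`.

```python
def is_valid_utf8(data):
    """Return True if the given bytes decode cleanly as UTF-8."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True
```

It can be applied to a file with `is_valid_utf8(Path(path).read_bytes())`. When writing summaries from Python, passing `encoding="utf-8"` explicitly to `open` avoids depending on the platform's default encoding.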

5. Size of test set summary predictions texts

The summaries you upload should not be longer than the corresponding full-text case reports, since they are meant to be summaries. As a sanity check, we recommend verifying that none of the summaries you return is larger than its full clinical case report.
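This sanity check can be sketched as below. Length is measured in characters here, which is an assumption, since the unit of comparison is not specified; the function name `oversized_summaries` and the dictionary-based inputs are likewise hypothetical.

```python
def oversized_summaries(reports, summaries):
    """Return summary file names whose text is longer than the matching report.

    Both arguments map file names to file contents (as strings).
    """
    too_long = []
    for report_name, report_text in sorted(reports.items()):
        stem = report_name[:-len(".txt")] if report_name.endswith(".txt") else report_name
        summary_name = f"{stem}_sum.txt"
        summary_text = summaries.get(summary_name)
        # Missing summaries are skipped here; only existing ones are compared.
        if summary_text is not None and len(summary_text) > len(report_text):
            too_long.append(summary_name)
    return too_long
```

An empty list means no summary exceeds the length of its source report.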

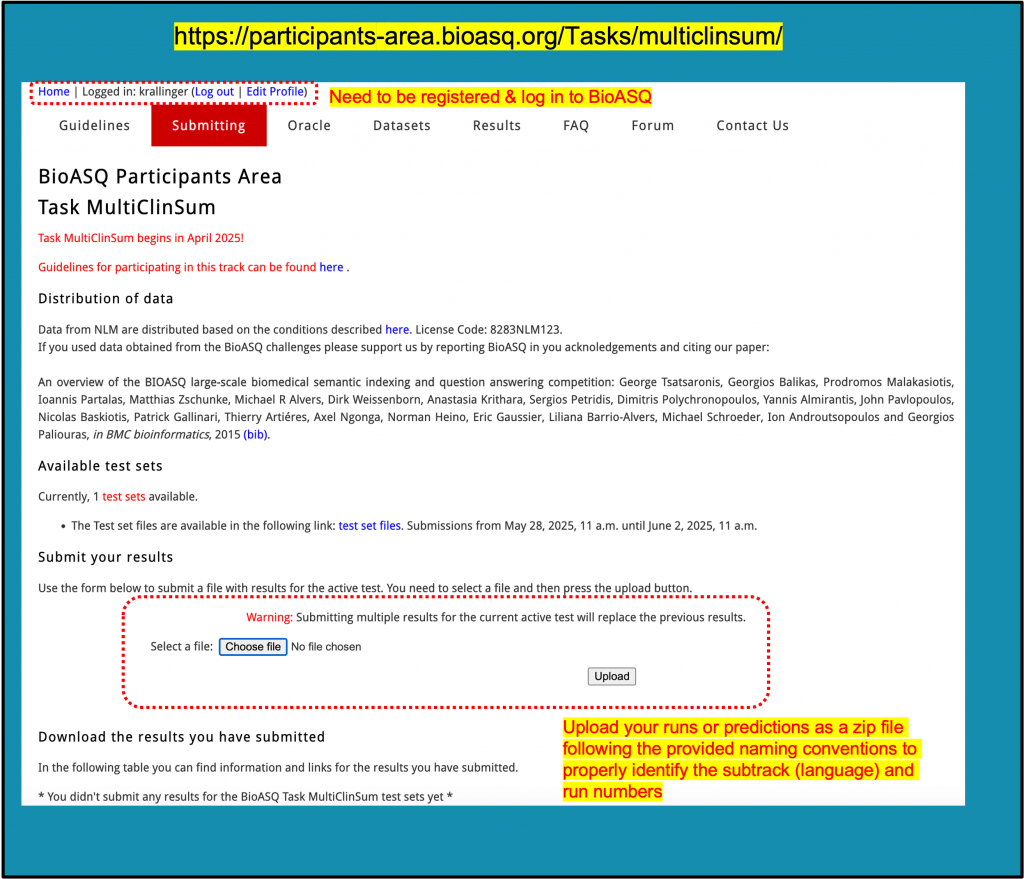

6. Upload submissions at BioASQ platform

IMPORTANT: to upload your submissions or runs for the task, you need to be registered at BioASQ. Once you are registered, you can go to the BioASQ submission page and upload your predictions. Remember that they need to be in a single zip file and follow the naming conventions and details provided above.

Use this BioASQ Submission link to submit or upload your predictions.

Screenshot of submission webpage at BioASQ platform.