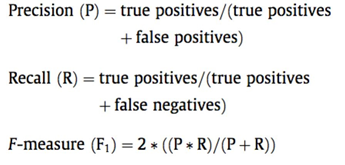

Evaluation will follow the classical entity-based or instance-based approach, which requires system outputs to match exactly both the beginning and end offsets of each entity mention and the entity annotation type of the gold standard annotations. The evaluation metrics will be micro-averaged precision, recall and F1-score.
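
As an illustration of strict matching and micro-averaging, the sketch below compares gold and predicted mentions as exact tuples and pools the counts over all documents. It is not the official evaluation script. The `Entity` record, its field names, the use of offsets, and the `PER`/`ORG` labels are hypothetical choices made only for this example.

```python
from __future__ import annotations

from typing import Iterable, NamedTuple


class Entity(NamedTuple):
    """Hypothetical mention record; the official data format may differ."""
    doc_id: str
    start: int  # beginning offset of the mention
    end: int    # end offset of the mention
    label: str  # entity annotation type


def micro_prf(gold: Iterable[Entity],
              predicted: Iterable[Entity]) -> tuple[float, float, float]:
    """Strict entity-level micro-averaged precision, recall and F1.

    A prediction is a true positive only if doc_id, start, end and label all
    match a gold mention exactly. Counts are pooled across all documents
    (micro-averaging). Duplicate mentions are collapsed by the set conversion.
    """
    gold_set = set(gold)
    pred_set = set(predicted)
    tp = len(gold_set & pred_set)   # exact boundary and type match
    fp = len(pred_set - gold_set)   # predicted but not in gold
    fn = len(gold_set - pred_set)   # in gold but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


if __name__ == "__main__":
    gold = [Entity("d1", 0, 5, "PER"), Entity("d1", 10, 18, "ORG"),
            Entity("d2", 3, 9, "PER")]
    pred = [Entity("d1", 0, 5, "PER"),    # exact match: true positive
            Entity("d1", 10, 17, "ORG"),  # wrong end offset: no credit
            Entity("d2", 3, 9, "ORG")]    # wrong type: no credit
    print(micro_prf(gold, pred))
```

In this example only one of the three predictions counts as correct, so precision, recall and F1 are all 1/3: a boundary error or a type error each produce both a false positive and a false negative under strict matching.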

Evaluation scripts, documentation and annotation guidelines will be made available on GitHub so that participating teams can test the evaluation tools' source code locally. Documentation on the evaluation metrics applied will also be provided. The metrics used will be those established in the literature for named entity (NE) recognition and classification tasks.